Apple Maps on iOS 11 beta 2 features a great new virtual reality (VR) mode that takes advantage of Apple's new ARKit framework to let you move around in 3D by walking.

This unapologetically cool feature seems to be tied to Flyover, which replaces satellite imagery with three-dimensional buildings, landmarks and other points of interest.

The new VR mode in iOS 11 Maps was highlighted yesterday by Twitter user @StijnDV, but it appears to have first been discovered by Tweetbot developer Paul Haddad on Wednesday.

To try it out yourself, open Maps on iOS 11 beta 2, switch to 3D mode by tapping “3D”, then use the search field at the bottom to find a place that has Flyover.

On the place card, tap the Flyover button and move the device around to rotate the view. Better still, physically walk forward, backward or side to side to explore the map in VR.

Mind blown.

So, how do we know this nifty feature actually uses ARKit? Because when you cover the camera, it displays the same message any ARKit-powered app does, telling you to aim the device at a different surface because “more contrast is required”.

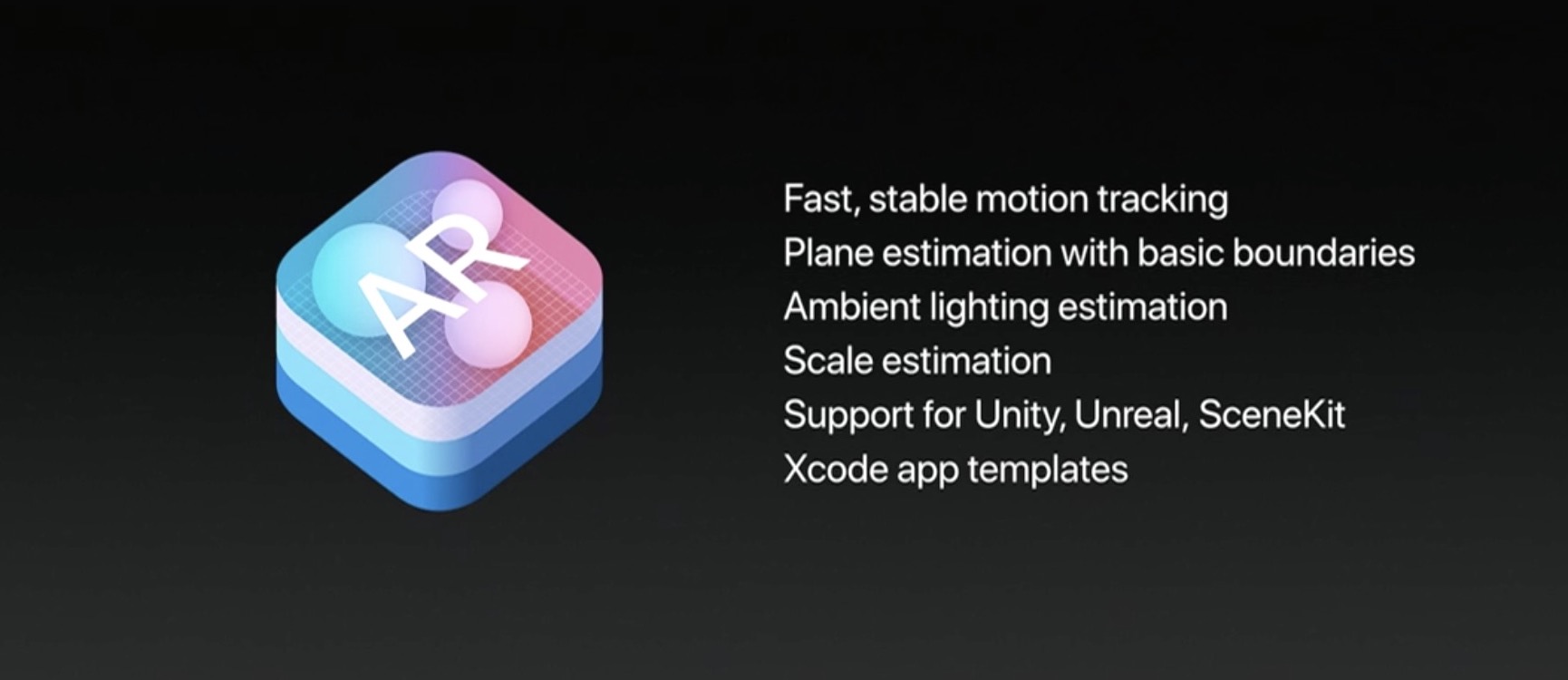

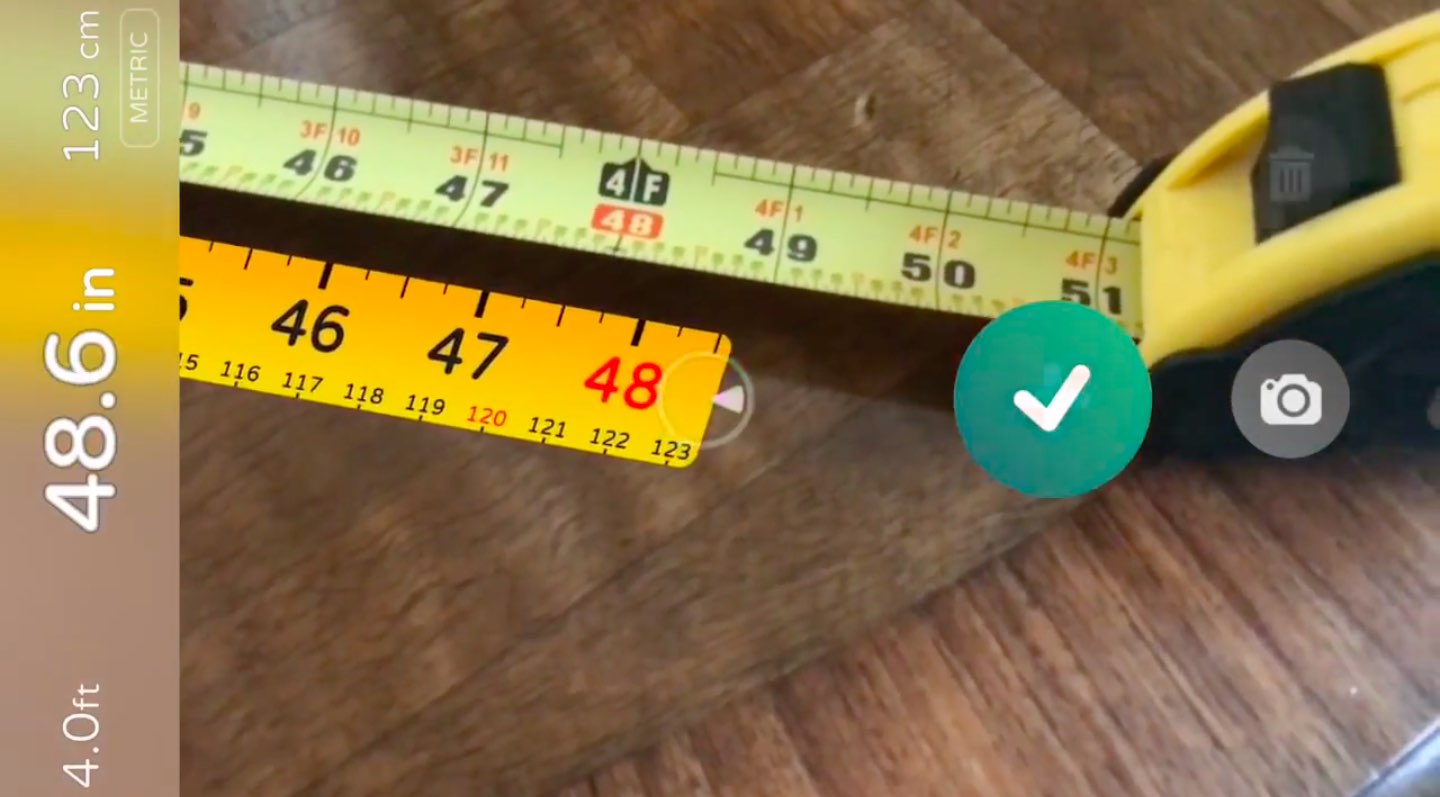

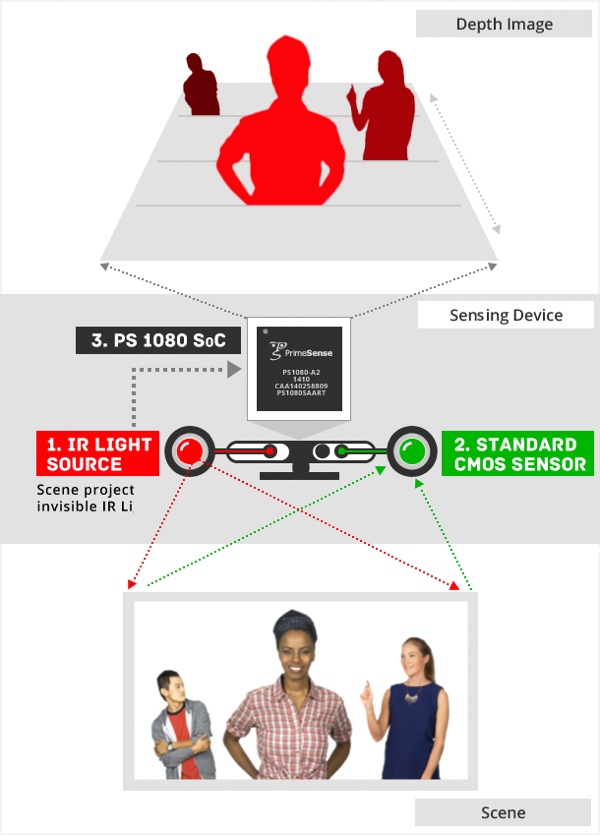

As a quick backgrounder, ARKit analyzes the live camera feed in real time, using computer vision to find horizontal planes in the real world, such as tables and floors.

I was able to successfully test the feature on my iPhone 6s running iOS 11 beta 2. Because I don't currently own an iPad, I couldn't test VR mode in Maps on Apple's tablet.

WOW There is an VR mode in Apple maps on iOS 11! It seems to use ARKit for positioning! pic.twitter.com/IdXiGoed26

— Stijn (@StijnDV) June 24, 2017

At any rate, this appears to be the default Flyover mode now, not a special setting. But don't worry, you can still switch back to the old Flyover mode, where you rotate and zoom the view with touch gestures.

This is honestly one of the coolest features in iOS 11! pic.twitter.com/Zjr6RRkKHk

— Stijn (@StijnDV) June 24, 2017

This is a wicked cool feature, and I can't help but wonder what it might look like when experienced through Apple's rumored digital glasses, which, according to Robert Scoble, should use optics by German lens specialist and optical instruments maker Carl Zeiss.

You can actually move around by walking! This is crazy cool! pic.twitter.com/ttR6RaAo7D

— Stijn (@StijnDV) June 24, 2017

Some people couldn't get Maps' new VR mode to work, but I suspect their hardware is to blame. The feature relies on ARKit, which uses the camera to track your actual position in the real world, and ARKit requires newer hardware.

Holy Flyover Magic Window batman. pic.twitter.com/Fb8nPeLT5J

— Paul Haddad (@tapbot_paul) June 22, 2017

According to Apple, ARKit runs on Apple's A9 and A10 processors. “These processors deliver breakthrough performance that enables fast scene understanding and lets you build detailed and compelling virtual content on top of real-world scenes,” says the company.

In other words, you'll need an iPhone SE, iPhone 6s, iPhone 6s Plus, iPhone 7, iPhone 7 Plus, the 9.7-inch iPad (early-2017 model) or an iPad Pro to use iOS 11 Maps' VR mode. Older devices won't be able to run it.

So, is this cool or what?

We'd obviously love to hear your thoughts and predictions regarding iOS 11 Maps' new VR mode and what it might signify in terms of possible new VR hardware from Apple.

Do us a favor and chime in with your thoughts in the comments section.