In this guide, we go over several existing iOS features that use Artificial Intelligence (AI) and Machine Learning (ML) to provide new and powerful capabilities to your iPhone and iPad.

Apple Intelligence (Apple’s term for its in-house AI) was announced alongside iOS 18 but is yet to be released. It’s supposed to bring several features, such as proofreading your writing, changing your sentence structure, summarizing long texts, surfacing the most urgent emails and notifications at the top, turning your sketches into images, removing unwanted objects from a photo’s background, and much more.

While Apple Intelligence is certainly going to take AI features for iPhone users to the next level, several existing aspects of iOS and iPadOS are already heavily dependent on Artificial Intelligence and Machine Learning. We will go over the important ones below.

Before we begin, note that AI and ML are closely related but not the same thing. If you want to understand the differences between them, Google Cloud and Amazon AWS have excellent articles that are worth your time.

1. Create your Personal Voice

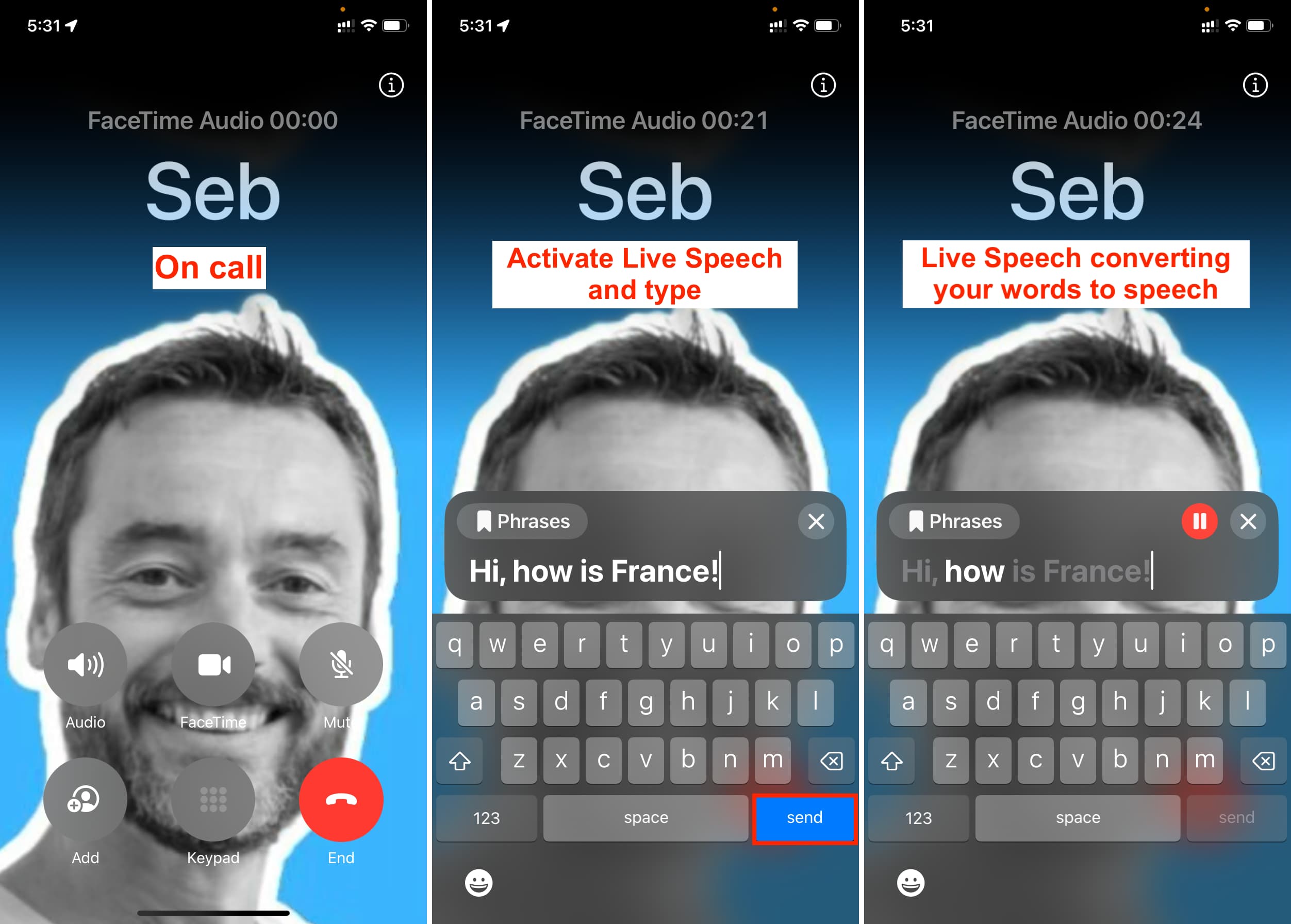

Thanks to Apple’s Machine Learning, people at risk of losing their speech can create an artificial voice that sounds like them.

This synthesized replica voice can be used with the Live Speech feature during FaceTime, phone calls, apps, and in-person communication. The user can simply type what they want to say, and their iPhone will speak those written words in their voice!

2. Control your iPhone with your eyes

After a quick Eye Tracking setup process, Apple’s on-device Machine Learning can follow your eye movements to highlight items and perform a tap just by looking. This is helpful for people who cannot use the touchscreen because of a physical disability.

3. On-device Live Captions and transcription

Your iPhone can understand audio and show it on screen as text. This can, for example, empower a deaf person to use Live Captions in FaceTime calls or while watching videos. It is also helpful for transcribing voice recordings in the Voice Memos and Notes apps.

All the processing is done locally on the device without an Internet connection to ensure utmost privacy.

4. Find images by keyword

The Photos app allows you to find the right image by describing it. For instance, you can type “Pizza,” and it will show all your pictures with pizza in them.

Apple is able to analyze, index, and understand your photo content using advanced intelligence and Machine Learning.

The upcoming Apple Intelligence will take this further by letting you find the right image using long, complex terms like “Sebastien in blue shirt and black tie” or “Pam with stickers on her face.”

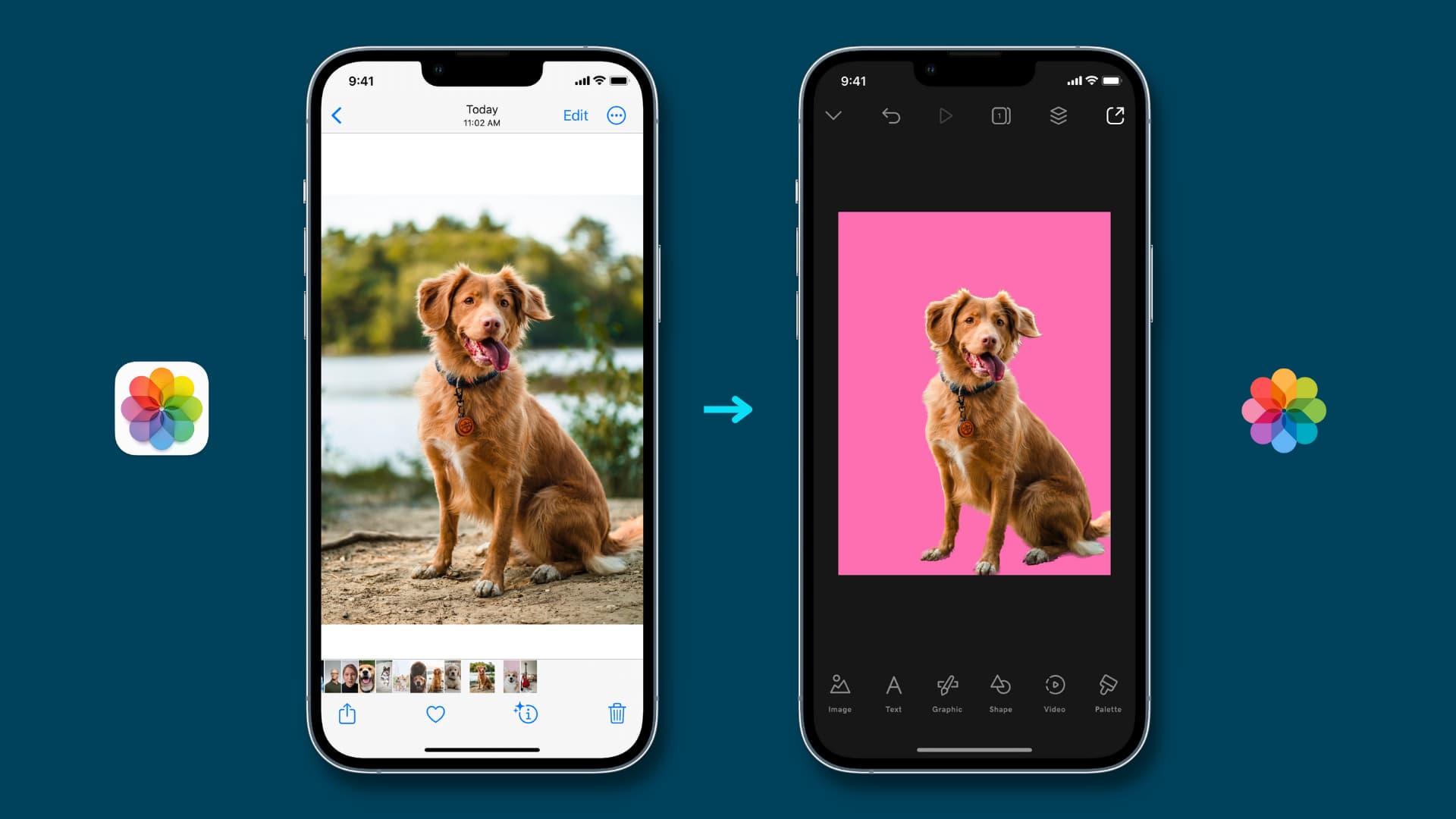
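To get a feel for how keyword search like this can work, here is a minimal sketch of an inverted index, which maps each label to the photos that carry it. This is only an illustration and not Apple’s actual implementation: the file names, the labels, and the `build_index` and `search` helpers are all hypothetical, and we assume the labels have already been produced by an on-device image classifier.

```python
from collections import defaultdict


def build_index(photo_labels):
    """Map each lowercase label to the set of photos that carry it."""
    index = defaultdict(set)
    for photo, labels in photo_labels.items():
        for label in labels:
            index[label.lower()].add(photo)
    return index


def search(index, query):
    """Return the photos that match every word in the query, sorted by name."""
    words = query.lower().split()
    if not words:
        return []
    matches = set(index.get(words[0], set()))
    for word in words[1:]:
        matches &= index.get(word, set())
    return sorted(matches)


# Hypothetical labels, as if an image classifier had already tagged each photo.
library = {
    "IMG_0001.jpg": ["pizza", "table"],
    "IMG_0002.jpg": ["dog", "beach"],
    "IMG_0003.jpg": ["pizza", "dog"],
}
index = build_index(library)
print(search(index, "Pizza"))  # prints ['IMG_0001.jpg', 'IMG_0003.jpg']
```

Because the labels are indexed ahead of time, typing a keyword only requires a quick lookup instead of re-scanning every photo.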

5. Take out the main subject of a picture

Your iPhone and iPad can tell the main subject apart from the rest of the image. As a result, you can lift your dog out of a photo and use it as a sticker. Or you can pull out your face from a picture and paste it into other apps to do things like creating a photo for your passport application.

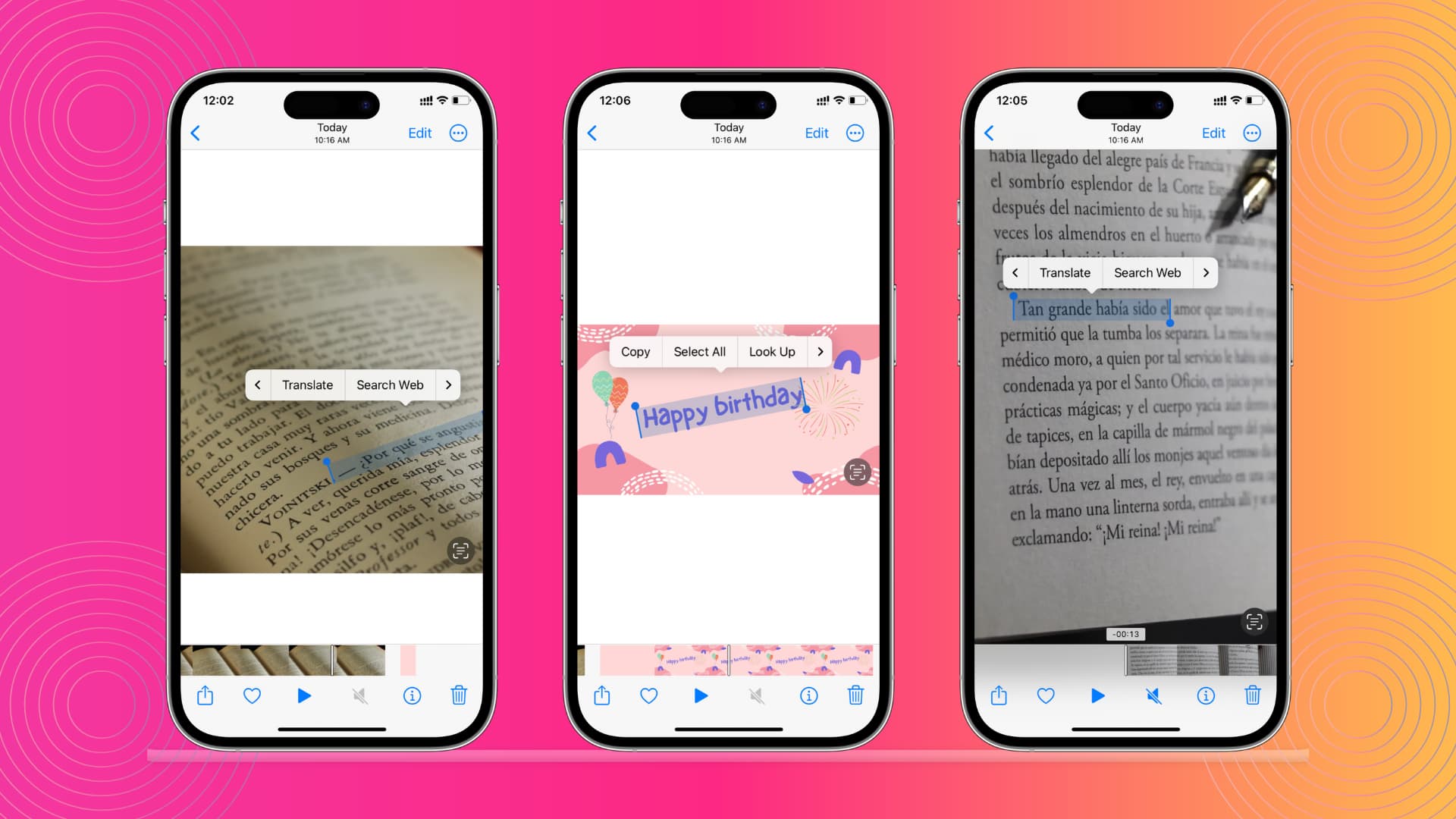

6. Copy text from images and videos

If you have text in a picture or video, you can simply touch it and copy it to the clipboard. Your iPhone is smart enough to recognize text in a picture and lets you take action on it as if it were some text in a Word document.

7. Recognize people and pets

The Photos app can recognize people and pets in your pictures and put all the pictures of a particular person in one spot, which you can access in the People section of the Photos app.

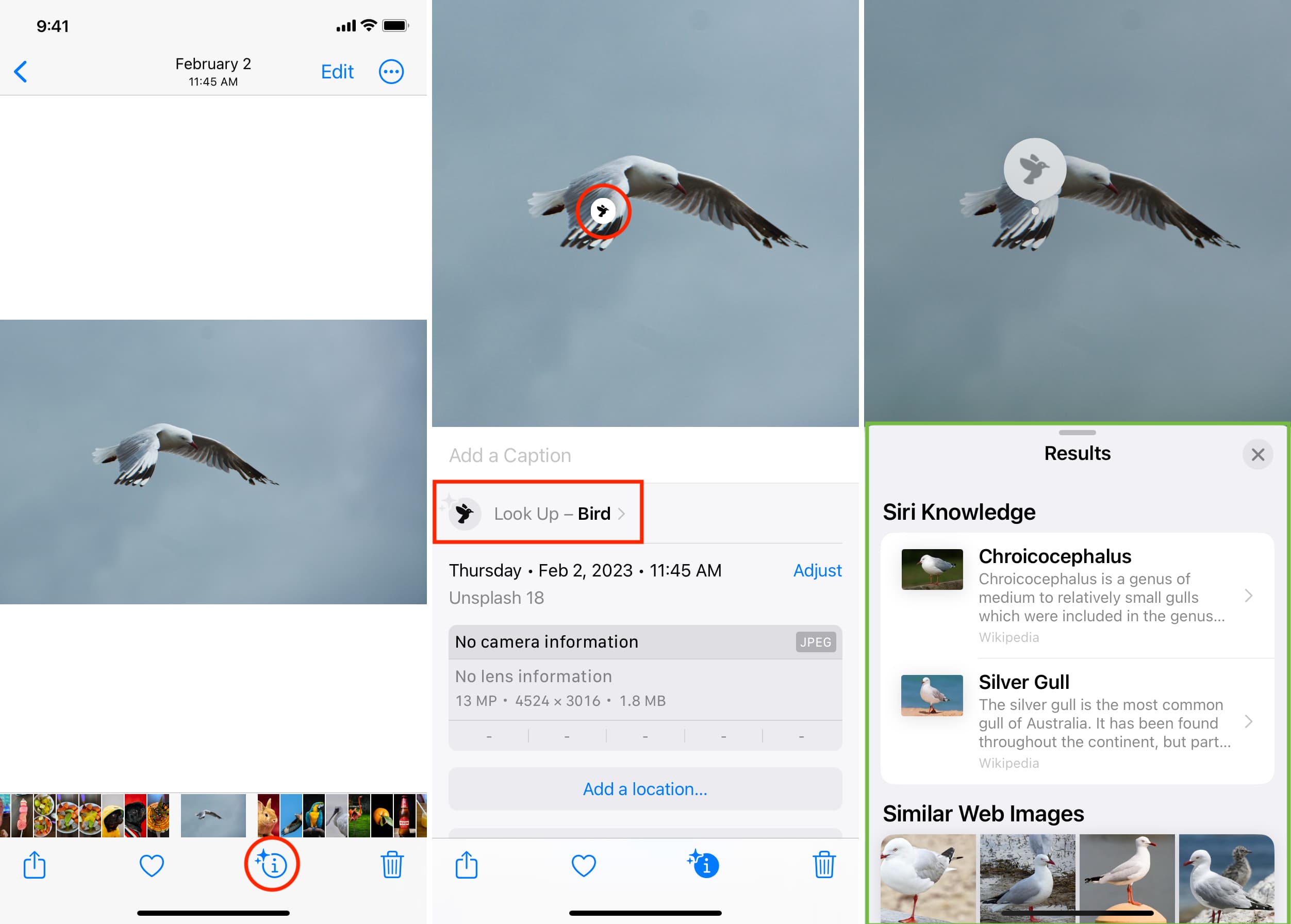

8. Tell you more about food, flowers, plants, artwork, and other stuff

When you open certain pictures in the Photos app, you may see special icons like the information button ⓘ with two stars or a leaf with stars. Tap that icon or swipe up to use the smart Visual Look Up option.

This intelligent feature is handy for getting more information about what’s in the picture, but keep in mind that it frequently gets things wrong. So, always double-check.

Your iPhone can even decipher washing symbols on clothing tags and give you more information about them!

9. Automatic filmmaking for you

In addition to recognizing people and pets, your iPhone has the ability to create memories and short videos based on an event, date, trip, and other parameters. You can find these specially curated media in the Memories and Trips sections of your Photos app.

10. Helps you take better pictures

Photographic Styles, Macro Photography, Night Mode, Portrait shots with background blur, and several other aspects of the iPhone Camera app rely heavily on advanced computation and algorithms.

For instance, when you hit the shutter button, your iPhone can instantly capture several photos and then use Machine Learning algorithms to combine the best aspects of all the images, leading to a single image that looks great with all the details, colors, highlights, and brightness.
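The idea of merging several shots into one can be illustrated with a very simple exposure blend. The sketch below is not Apple’s pipeline and does not use Machine Learning at all: it is a hand-written weighting that assumes each frame is a flat list of grayscale pixel values between 0.0 (black) and 1.0 (white), and it favors pixels that are neither too dark nor too bright. The function names and sample frames are hypothetical.

```python
def exposure_weight(value):
    """Weight a pixel by how close it is to mid-gray (0.5)."""
    # A small floor keeps fully black or white pixels from having zero weight.
    return max(1e-3, 1.0 - abs(value - 0.5) * 2.0)


def fuse_frames(frames):
    """Blend same-sized frames pixel by pixel using the exposure weights."""
    fused = []
    for pixels in zip(*frames):
        weights = [exposure_weight(p) for p in pixels]
        total = sum(weights)
        fused.append(sum(p * w for p, w in zip(pixels, weights)) / total)
    return fused


# Three hypothetical 4-pixel frames of the same scene: dark, normal, bright.
dark = [0.05, 0.10, 0.30, 0.45]
normal = [0.20, 0.40, 0.60, 0.90]
bright = [0.50, 0.80, 0.95, 1.00]
print([round(v, 2) for v in fuse_frames([dark, normal, bright])])
```

Each output pixel leans toward whichever frame exposed that spot best, which is the basic intuition behind combining multiple captures into one balanced photo.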

11. Offers journaling suggestions

The Journal app on iPhone takes into account your location, time, pictures, recent activities, music tastes, and other factors to offer personalized suggestions. These can help you quickly create a diary entry so you can reminisce about the day in the future. All the processing to come up with journaling suggestions happens privately on the device, courtesy of Apple’s advanced on-device Machine Learning.

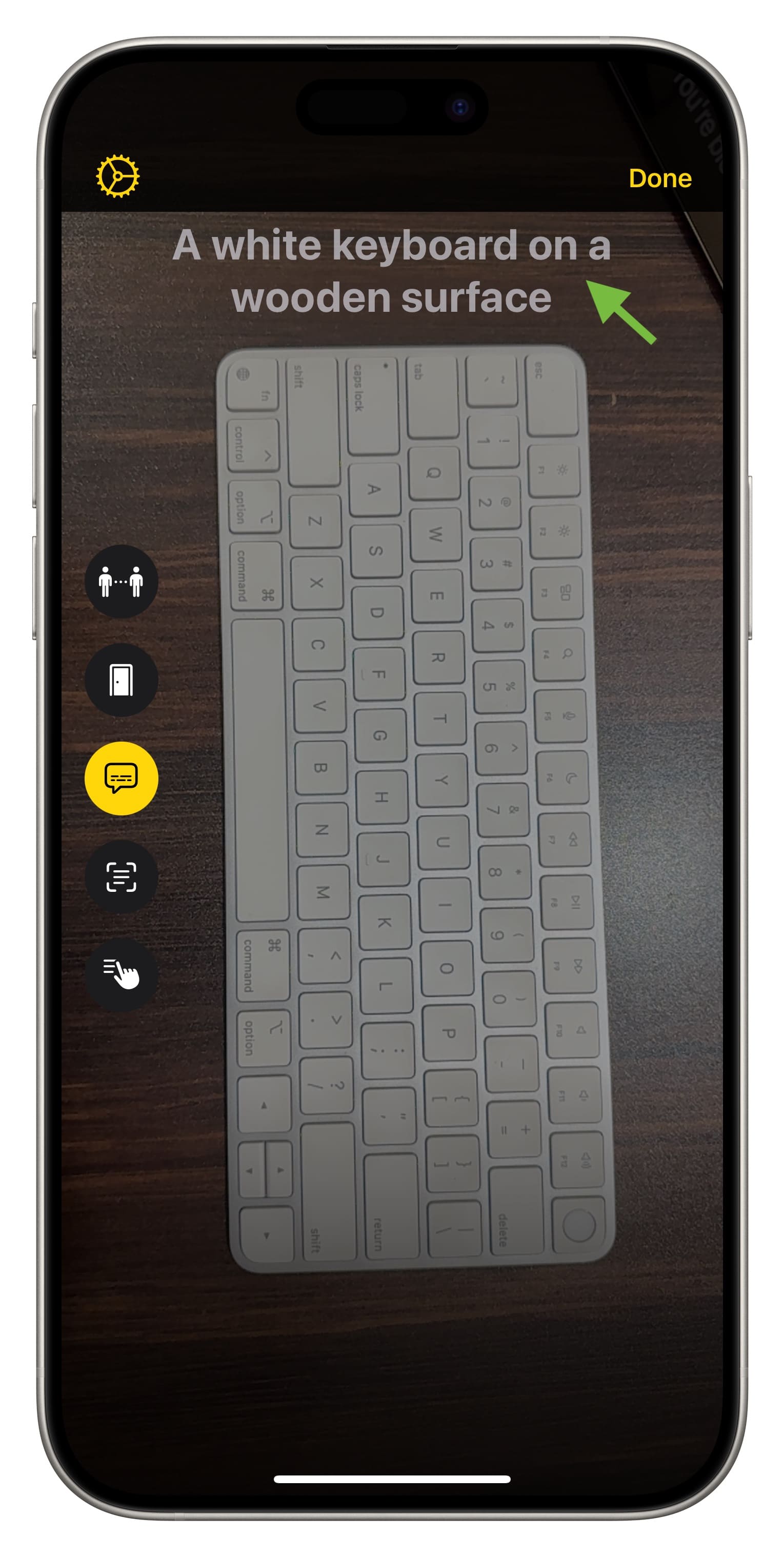

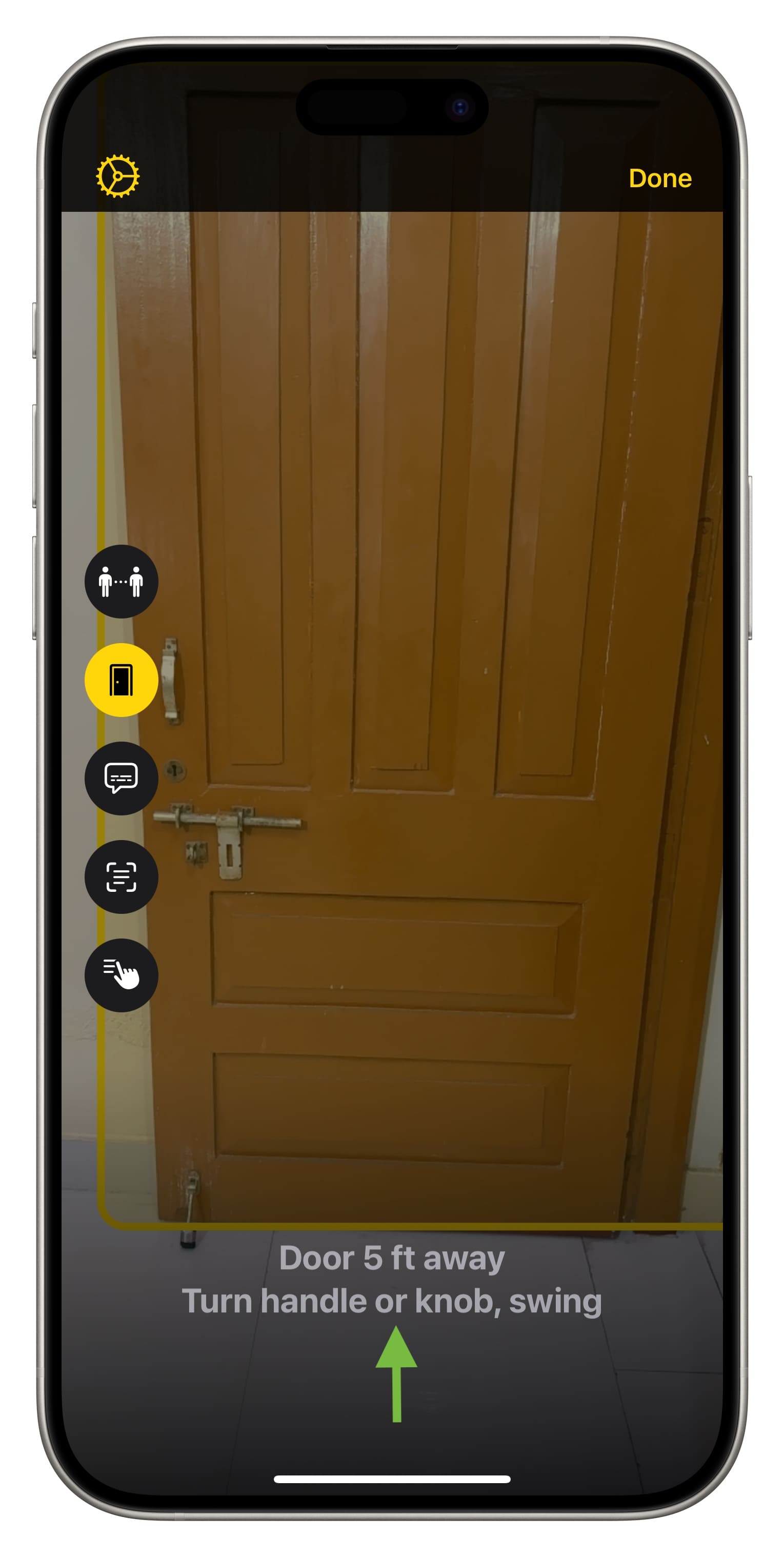

12. Real-time Live Recognition

Thanks to on-device intelligence, Live Recognition and VoiceOver features on your iPhone can detect people, doors, text, furniture, and other objects around you and describe what they are. For instance, when I point it at my work desk, it rightly says, “a white keyboard on a wooden surface.”

It can even explain how to open certain doors!

You can use the live detection mode in the Magnifier app on your iPhone.

13. Relevant app suggestions

Whether it’s App Library, Siri Suggestions widget, Spotlight, or Lock Screen suggestions, iOS uses Machine Learning to understand your usage patterns and suggest relevant apps and actions at the right spot and at the right time.

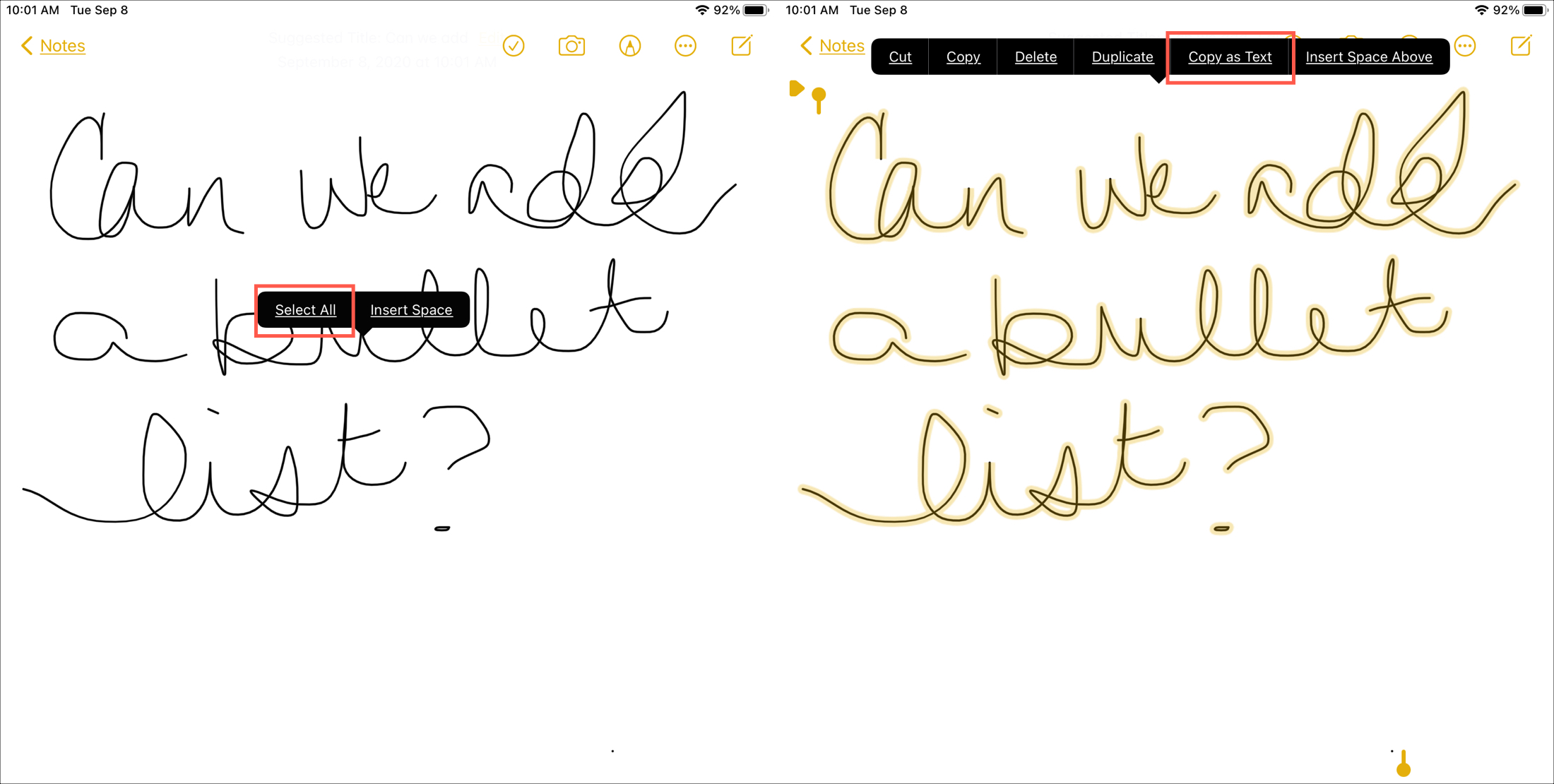
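As a rough illustration of learning from usage patterns, the sketch below counts which apps were opened at each hour of the day and suggests the most frequent ones. It is a simple frequency count under our own assumptions, not how iOS actually ranks suggestions, and the launch log and helper names are hypothetical.

```python
from collections import Counter, defaultdict


def learn_habits(launch_log):
    """Count how often each app was opened in each hour of the day."""
    habits = defaultdict(Counter)
    for hour, app in launch_log:
        habits[hour][app] += 1
    return habits


def suggest_apps(habits, hour, limit=2):
    """Return the most frequently opened apps for the given hour."""
    counts = habits.get(hour)
    if not counts:
        return []
    return [app for app, _ in counts.most_common(limit)]


# Hypothetical history of (hour of day, app opened).
launch_log = [
    (7, "Weather"), (7, "News"), (7, "Weather"),
    (7, "Mail"), (7, "News"), (7, "Weather"),
    (22, "Clock"), (22, "Books"), (22, "Clock"),
]
habits = learn_habits(launch_log)
print(suggest_apps(habits, 7))   # prints ['Weather', 'News']
print(suggest_apps(habits, 22))  # prints ['Clock', 'Books']
```

A real system would likely weigh many more signals, such as location and recent activity, but the principle of matching suggestions to past behavior is the same.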

14. Palm rejection when using Apple Pencil

Your palm naturally rests on the screen when you are writing or drawing with the Apple Pencil. iPadOS uses Machine Learning to figure out the difference between your finger touch, palm rest, and pencil inputs. So, when you’re drawing with a Pencil, the software is smart enough to ignore your palm touches.

Similarly, Scribble with Apple Pencil, which converts your handwriting to typed text, and the ability to copy handwritten words as text also rely on advanced learning algorithms.

15. Optimize battery charging and longevity

Even something as simple as charging your device is not bereft of Machine Learning. As you use your iPhone, it learns your charging and usage patterns and can make changes to extend the battery’s lifespan.

For instance, if you plug in your iPhone for charging every day before going to bed, your iPhone will pause charging after reaching 80%. It will resume charging at the right time so that when you wake up, your phone is at 100%. This ensures your iPhone’s battery does not sit at a full 100% charge for long hours at night, which helps preserve its health.

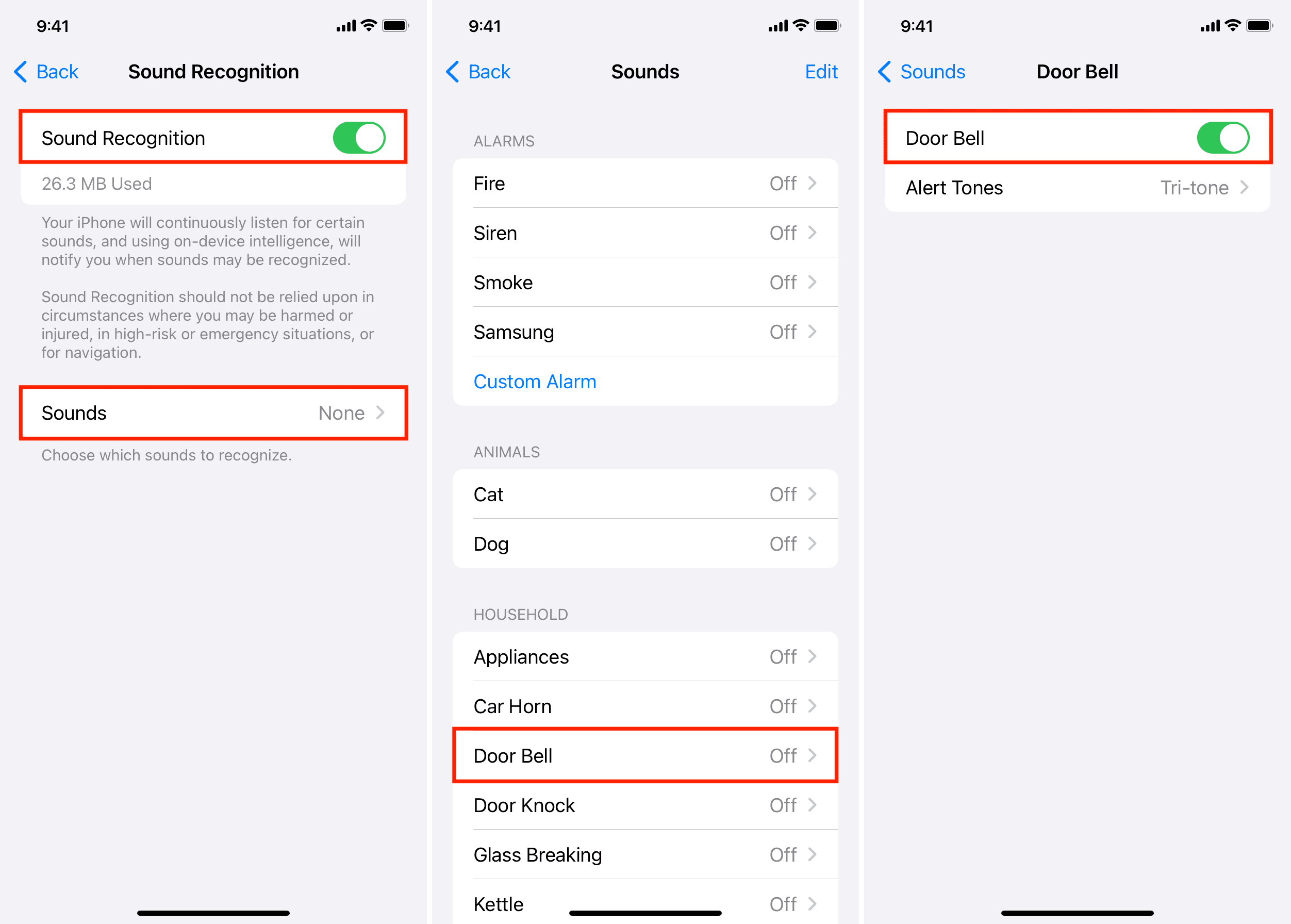
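The timing described above comes down to a bit of arithmetic, which the sketch below works through. It is only an illustration and not Apple’s actual algorithm: we assume the usual unplug time can be estimated as the median of past mornings, and the charging speed of 1.5 minutes per percent is a made-up figure. The function names are hypothetical too.

```python
from datetime import datetime, timedelta
from statistics import median


def predict_unplug_minutes(past_unplug_minutes):
    """Estimate the usual unplug time (minutes after midnight) as the median."""
    return int(median(past_unplug_minutes))


def resume_charging_at(unplug_time, hold_level=80, target_level=100,
                       minutes_per_percent=1.5):
    """Work backward from the unplug time to find when charging should resume."""
    # minutes_per_percent is an assumed charging speed, not a real figure.
    minutes_needed = (target_level - hold_level) * minutes_per_percent
    return unplug_time - timedelta(minutes=minutes_needed)


# Hypothetical unplug times from the past five mornings:
# 6:55, 7:00, 7:05, 7:00, and 8:00, in minutes after midnight.
history = [415, 420, 425, 420, 480]
usual = predict_unplug_minutes(history)
unplug = datetime(2024, 9, 2) + timedelta(minutes=usual)
print(resume_charging_at(unplug).strftime("%H:%M"))  # prints 06:30
```

Using the median means one late morning (the 8:00 entry) does not shift the estimate, so the phone would still aim to be full by 7:00.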

16. Sound recognition

Your iPhone can use on-device intelligence to listen for various sounds, such as fire alarms, door knocks, breaking glass, appliances, and more, and notify you about them. A person who cannot hear can use Sound Recognition to know if someone is ringing their doorbell, for instance.

17. Facial recognition

Face ID already uses a complex system to understand your facial features for authentication.

Furthermore, the people recognition abilities of the Photos app can be used with HomeKit cameras to tag your family and friends, helping you know who’s at the door.

In addition to the features mentioned above, several other things, such as keyboard predictions, emoji suggestions, wallpaper depth effect on the Lock Screen, suggested wallpapers with colored backgrounds, offline Siri, and more, are also dependent on Machine Learning.

By now, you might be thinking that almost everything on the modern iPhone is touched by ML and AI, and you wouldn’t be wrong.

Apple’s Senior Vice President for AI and ML, John Giannandrea, said a few years back that “Machine Learning will transform every part of the Apple experience in the coming years,” and it’s clear that his words are coming true.
